I want to start out talking about how clinical trials should be perceived. When you spend your entire life in the medical field and there are decades of standards for “evidence based medicine,” it is really hard to step back and reflect on whether those standards are really the most appropriate.

The most human attitude is “This is the way things have been done. Who am I to question them?” Well-meaning clinicians can thus make systemic errors in judgement because they are thinking in the predominant paradigm, and that is always what “evidence based medicine” means to them. The fact that most thought leaders become thought leaders because they receive grants and consulting fees from the pharmaceutical and biotech industries to fund their groundbreaking research makes them even more likely to accept the paradigm. They have no incentive not to.

The pharmaceutical industry also largely funds most of the major medical associations through ads in their magazines and exhibit booths at their conventions. Do you think the American Medical Association is truly unbiased? How can they possibly be when the medical industry is responsible for large parts of their budgets? These associations set the standards of practice.

The medical journals themselves are funded by pharmaceutical ads. So can we really trust them to be unbiased purveyors of information? The FDA itself also receives a lot of money from pharmaceutical user fees, before you even mention the fact that agency heads are appointed politicians who receive tons of money from the pharmaceutical industry giants. I could go into much more detail on the damning evidence of how regulatory bodies and medical associations are captive to industry, but that is not the subject of this article.

I just want to bring to everyone’s attention, especially established clinicians, that their view of how clinical trials work might be somewhat colored by paradigms influenced by those with a financial interest in certain products coming to market. I ask that you read this with an open mind. I expect what I say here to be controversial because I understand how trials are traditionally looked at.

Clinical Trials 101

For those of you who are not medical researchers, the idea of a clinical trial is pretty basic. The best evidence for anything, medically speaking, is a large multicenter randomized double (or triple) blinded, placebo (or active) controlled trial with a pre-specified, clinically meaningful primary endpoint to prove the hypothesis you are testing. If you do not understand these terms, I plan on explaining them further in another article.

To simplify things, you have one or more groups of people where you are comparing one treatment to another (often a placebo - an inactive pill) to look for differences in the outcomes. There is usually one large question you are asking, typically around the efficacy of the treatment. This is the so-called primary endpoint which represents a way of testing the primary hypothesis you want to prove. For example, you might want to prove the hypothesis that a vaccine results in fewer COVID-19 infections versus control, so you rigorously define an infection and check for differences in the treatment group and the control group after a pre-set number of months or events (positive COVID-19 cases).

Ideally you should see a difference between the two or more groups of patients tested if your hypothesis is correct. The study only represents a sample of what you will see in the overall population. It will usually not represent the exact results if you treated everybody in the world who could receive the treatment under roughly the same circumstances as there is usually “sample error.” The more patients in the trial, the closer the sample will be to the “real number.” You look at the number of patients in the sample and the difference between the patient groups to estimate the probability that the hypothesis you are testing is correct based on the sample you have.

For example, in the Pfizer COVID-19 jab trial, the primary endpoint was “COVID-19 incidence per 1000 person-years of follow-up in participants without serological or virological evidence of past SARS-CoV-2 infection before and during vaccination regimen – cases confirmed ≥7 days after Dose 2” (see page 13, VRBPAC FDA Briefing Document Pfizer-BioNTech COVID-19 Vaccine).

Translating that to English, they were comparing the absolute rate of COVID-19 infection by dividing the number of people infected by the total amount of time (i.e. patient-years) that patients in each group in the trial were exposed to the treatment. You cannot just compare the absolute numbers of infections in both arms because you might have less follow-up time in one arm than in the other. The more time you have followed a person, the more total risk they have of coming down with COVID-19. In a well randomized study, both arms are usually close without looking at rates anyway, but there was a slight difference in this study because Pfizer was sending letters to placebo patients telling them they could take the vaccine after EUA approval. As a result, we have slightly more follow-up for the vaccine group “at six months,” at 3.7 versus 3.6 months (calculated later in this article).

“Without serological or virological evidence of past SARS-CoV-2 infection” is basically saying they only want to look at people who did not already have COVID-19 because (1) those people are likely to be immune to catching it again and will artificially lower the infection rate in whatever arm they are randomized to and (2) they want to exclude people who get COVID-19 after the first dose because they believed the vaccine might only be truly effective after dose 2, so including them would in their opinion artificially lower the pure efficacy of the vaccine that they wanted to measure. This period was extended past the second jab (“≥7 days after Dose 2”) because presumably dose 2 might take a few days to start to have its effect and they did not want to include any infections that might be acquired and asymptomatic in that timeframe. The greater than or equal to 7 days allowed them to account for this possibility.

So this has a sound logic to it in terms of maximizing the efficacy seen in the trials of the vaccine. However, I have at least three reasons this was a horrible choice of primary endpoint that I will discuss in another article, but it was the actual primary hypothesis of the trial.

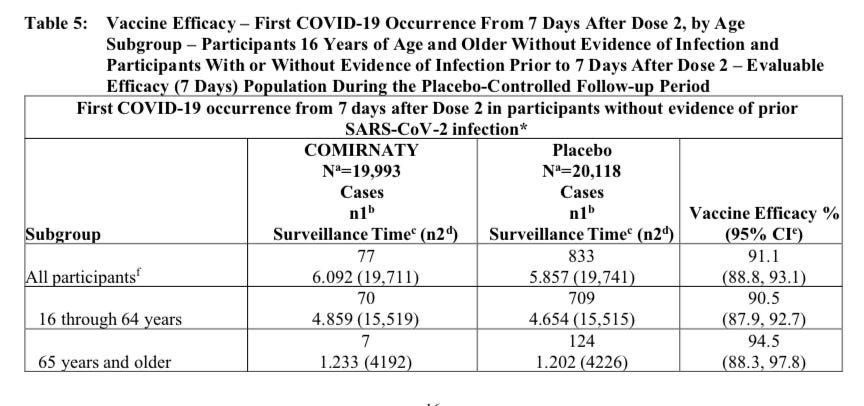

At the “six month” analysis, 77 patients came down with COVID-19 in the vaccine group (over 6,092 patient years) versus 833 in the control group (5,857 patient years). The incidence per 1,000 patient years was 77*(1,000/6,092) = 12.64 cases per 1,000 patient years versus 833*(1,000/5,857) = 142.22 cases per 1,000 patient years in control. That represents a 1 - 12.64/142.22 = 91.1% reduction in the incidence of COVID-19. This is quite an impressive result. By doing some math, statisticians can say the real world effect has a 95% chance of being between 88.8% and 93.1%. This 88.8% to 93.1% range is called a “confidence interval.” You can find these numbers from the label on the first line of the following table to see how this data is reported to physicians in real life:

Source: COMIRNATY Package Insert, Table 5, Page 16.
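To make the arithmetic concrete, here is a minimal Python sketch of the incidence and relative reduction calculation, using only the case counts and patient years quoted above. The helper name `incidence_per_1000` is made up for illustration, and the sketch reproduces only the point estimate, not the confidence interval.

```python
def incidence_per_1000(cases: int, patient_years: float) -> float:
    """Cases per 1,000 patient years of follow-up (illustrative helper)."""
    return cases * 1000 / patient_years


# Figures quoted in the text for the "six month" analysis.
vaccine_rate = incidence_per_1000(77, 6092)
placebo_rate = incidence_per_1000(833, 5857)

# Relative reduction in incidence: 1 minus the ratio of the two rates.
relative_reduction = 1 - vaccine_rate / placebo_rate

print(f"vaccine arm: {vaccine_rate:.2f} cases per 1,000 patient years")
print(f"placebo arm: {placebo_rate:.2f} cases per 1,000 patient years")
print(f"relative reduction: {relative_reduction:.1%}")
```

Dividing by patient years rather than by head count is what lets the two arms be compared even though their follow-up time differs slightly.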

For your information, you get the average months of follow-up in the vaccine arm by taking the 19,711 total patients in that analysis (I am using the number in parentheses because that is the number of patients at risk for the endpoint, which is a fraction of the total 19,993 patients in the group), dividing that into the total patient years, and multiplying by twelve months in a year: 6,092*12/19,711 = 3.7 months, and for control 5,857*12/19,741 = 3.6 months. Note the total time on study is greater than this (4.5 vs 4.3 months) because the safety evaluation carries all the way back to dose 1, while the efficacy analysis starts 7 days after dose 2, roughly one month later if scheduled according to plan (and things do not always go according to plan with scheduling in a trial). Both are well below “six months,” which is the reason I like to put quotes around “six months” when I reference the “six month data.” We actually do not have that much blinded follow up on the entire study population, and probably never will. Only 54.5% of patients (12,006) actually had at least 6 months of follow up (page 12, Unsolicited Adverse Events, COMIRNATY Package Insert).
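The follow-up calculation is simple enough to check in a few lines of Python. This is only a sketch of the division described above; `mean_followup_months` is an illustrative name, and the inputs are the patient years and at-risk patient counts quoted in the text.

```python
def mean_followup_months(patient_years: float, patients_at_risk: int) -> float:
    """Average efficacy follow-up per patient, in months (illustrative helper)."""
    return patient_years * 12 / patients_at_risk


vaccine_months = mean_followup_months(6092, 19711)
placebo_months = mean_followup_months(5857, 19741)

print(f"vaccine arm: {vaccine_months:.1f} months")
print(f"placebo arm: {placebo_months:.1f} months")
```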

Because the hypothesis was that the vaccine was better than placebo in terms of preventing COVID-19, i.e. greater than a 0% reduction in the incidence of the disease, the lower bound of 88.8% being so far from 0% makes this result highly statistically significant. We represent this by the “p-value,” which represents the chance that this result is random noise in the study. In this case the p-value is almost nonexistent (p is much less than 0.00001; you can add a bunch of 0’s to that expression), making a treatment effect a virtual certainty. There are many different ways of calculating a p-value based on the type of primary endpoint and the assumptions about how the data is distributed.

The general standard for most trials is that a 5% chance of the result being random noise is considered low enough for the trial to count as a success. By this standard, you should expect about 1 out of every 20 trials of a treatment with no real effect to reach p < 0.05 through random noise alone. You can show statistical significance to “prove a hypothesis” and the hypothesis can still be incorrect. This is why primary endpoints are specified in advance of the trial. The more you look through the data, the more likely you are to find one of these random 1 in 20 events. This is also one reason why the FDA has a history of requiring two positive randomized phase III registration studies for new pharmaceuticals given to large populations. The odds of two barely significant phase III trials both being a fluke are only 0.05*0.05 = 0.0025.
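Here is a rough Python sketch of why more looks at the data make a fluke more likely. It rests on two simplifying assumptions that are not from any trial protocol: every comparison is independent, and there is no real effect in any of them. The function name is illustrative.

```python
ALPHA = 0.05  # conventional significance threshold


def chance_of_at_least_one_fluke(num_comparisons: int, alpha: float = ALPHA) -> float:
    """Probability that at least one of several independent comparisons
    reaches p < alpha when no real effect exists in any of them."""
    return 1 - (1 - alpha) ** num_comparisons


for looks in (1, 5, 20, 100):
    print(f"{looks:>3} comparisons: {chance_of_at_least_one_fluke(looks):.1%}")

# Two independent trials both crossing the threshold by chance alone.
both_trials_fluke = ALPHA * ALPHA
print(f"two trials both a fluke: {both_trials_fluke:.4f}")
```

Under these assumptions the chance of at least one spurious “hit” climbs quickly with the number of comparisons, which is the reason a single pre-specified primary endpoint carries more weight than anything found afterwards.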

This process of looking through the data to see other relationships is called “subset analysis.” It is useful for finding relationships that form new hypotheses to be confirmed in other trials. However, generally speaking, you do not strictly have something proven in a formal sense unless it is the subject of its own trial. The exception, of course, is when the p-value is so low, like the Pfizer one, that you know with near certainty it was not random noise. The magnitude of the p-value is important to the interpretation of the data.

Also, it is possible to do a trial on a drug that works and not achieve a positive trial result due to random noise. The more patients you enroll, the more likely you will get a meaningful result closer to the real answer. As a result, pharmaceutical companies assume a specific treatment effect, for example a 50% reduction of COVID-19 infection, and then choose a number of patients that powers the trial for a certain probability of showing a treatment effect if it is that exact amount. If the treatment effect in reality is higher, then the odds go up. But if a trial is powered to achieve an 80% probability of success given the assumed treatment effect is accurate, then one out of five (20%) clinical trials will fail for that drug, even if it works!
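To give a feel for how power depends on sample size and the assumed treatment effect, here is a minimal sketch using a textbook normal approximation for comparing two proportions. It is not the method of any particular sponsor, and the 1% control infection rate and the arm sizes are hypothetical numbers chosen only for illustration.

```python
from statistics import NormalDist


def approx_power(control_rate: float, relative_reduction: float,
                 patients_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided test comparing two proportions,
    using the normal approximation with equal arm sizes."""
    treated_rate = control_rate * (1 - relative_reduction)
    # Standard error of the difference between the two observed proportions.
    std_error = (control_rate * (1 - control_rate) / patients_per_arm
                 + treated_rate * (1 - treated_rate) / patients_per_arm) ** 0.5
    z_critical = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(abs(control_rate - treated_rate) / std_error - z_critical)


# Hypothetical scenario: 1% of control patients infected, 50% assumed reduction.
for n in (1000, 2500, 5000, 10000):
    print(f"{n:>6} per arm: power is roughly {approx_power(0.01, 0.5, n):.0%}")
```

Whatever the exact numbers, the pattern is the point: power rises with more patients and with a larger true effect, and any trial powered below 100% will sometimes fail even when the drug works.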

What About Safety?

This is the big problem with clinical trials, as you are not only interested in the primary endpoint of efficacy. Safety is almost never incorporated into the actual primary endpoint, which is the main lens through which any trial is viewed. While safety is certainly evaluated, it is not treated with the same analytical rigor as the primary endpoint. And there are reasons for that. Theoretically speaking, if you look at enough individual side effects, you should expect about 1 out of every 20 to reach a p-value of 0.05 purely at random, so some people tend to take such results with a grain of salt if they hover in that range.

Individually, side effects tend to occur with much lower frequency than the primary benefit. And in many cases, this is the most important reason that they can in reality be minimized: the treatment effect outweighs the potential side effects even if you know they are significant. But since the trial is powered to find statistical differences in the primary efficacy endpoint, not in side effects, you are not going to have enough patients to “prove” a side effect “real” with a p-value less than 0.05. And a pharmaceutical company is going to make a strong case to discard any side effect with a p-value of 0.05 as “potential noise.” The larger, better done studies like the Pfizer COVID-19 trial can detect some of these less frequent events when viewed in aggregate, as we will see later in this blog. But statistically it is nearly impossible to prove “safety” in even the largest trial, since a very rare and debilitating event like Guillain-Barré syndrome could happen at such a low frequency that it is not observed at all in smaller trials and is seen at a rate that is not statistically significant in larger trials.
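A short sketch shows how easily a rare event can be missed entirely. The 20,000-patient arm and the event rates below are hypothetical, and the calculation assumes each patient’s risk is independent of the others.

```python
def prob_never_observed(event_rate: float, patients: int) -> float:
    """Chance that an event with the given per-patient rate shows up
    zero times among the patients in one trial arm."""
    return (1 - event_rate) ** patients


ARM_SIZE = 20_000  # hypothetical number of patients in the treated arm

for one_in in (1_000, 10_000, 100_000, 1_000_000):
    missed = prob_never_observed(1 / one_in, ARM_SIZE)
    print(f"1 in {one_in:>9,}: {missed:.1%} chance of seeing no cases at all")
```

Under these assumptions, an event that strikes one patient in a million would almost always go completely unseen in an arm of this size, and even a one in 100,000 event would usually be missed.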

It is this statistical reality I want people to realize before they dismiss out of hand potential safety issues. Demonstrating safety, from a statistical point of view, is a hard problem because massively large trials that we as consumers would want to set our minds at ease are impractical.

Additionally, while the therapeutic impact is often singular in terms of targeted positive health outcomes, the side effects can be multiple. The intervention is tailored to stimulate a particular receptor leading to a specific clinical outcome or, in the case of a COVID-19 vaccine, to generate a specific immune reaction to prevent COVID-19. Thus this is always the event for which you are likely to see the most statistically significant result, because it is what the treatment is specifically designed to do.

However, a treatment injection could lead to an autoimmune response that has different manifestations based on the exact site of the injection, luck, and the genetics and lifestyle choices of the person injected. You could see one of several or several thousand resulting side effects occurring at a very low rate, none provably significant by itself, but which in aggregate might represent a very meaningful risk that outweighs the low absolute clinical benefit from the therapy. Thus it is not appropriate to look at the side effects in isolation from each other.

A pharmaceutical company has the financial incentive to isolate these effects individually, saying they are “rare events” and not statistically significant in and of themselves, while the proper analysis is to look at them in aggregate in a true risk benefit analysis.
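The aggregation argument can be sketched numerically. The figures below are purely hypothetical (fifty distinct side effects, each with a 1 in 5,000 excess risk) and the effects are assumed to be independent of one another; the point is only how individually small rates add up.

```python
def aggregate_risk(individual_rates: list[float]) -> float:
    """Chance of experiencing at least one of several independent events."""
    chance_of_none = 1.0
    for rate in individual_rates:
        chance_of_none *= 1 - rate
    return 1 - chance_of_none


# Hypothetical: 50 different side effects, each affecting 1 patient in 5,000.
rates = [1 / 5_000] * 50

print(f"each effect alone: {rates[0]:.2%}")
print(f"any of the 50 together: {aggregate_risk(rates):.2%}")
```

With these made-up inputs, each effect on its own looks negligible, yet the combined chance of suffering at least one of them is around 1%.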

Understanding Risk Versus Reward

In reality, for most drugs with a clinically meaningful benefit, it is very appropriate to focus on the primary endpoint and just make sure there are no big side effects. A cancer therapy might be significantly toxic but, relative to the risk of death from cancer, still be considered worth the risk, particularly if you have trials that show a statistically significant survival benefit. There is a massive treatment benefit, as most of these patients are likely to die if untreated.

However, as you progress to less sick patients, such as those taking prophylactic medications (medical speak for preventative treatments) to prevent development of a bad medical outcome, or completely healthy individuals given a vaccine, this risk calculus becomes a lot more complex, and the traditional approach of focusing on the primary endpoint and dismissing unlikely side effects is a lot less tenable. The Vioxx/Bextra debacle is the classic example of what can go wrong when infrequent clinical adverse events are not given the appropriate consideration. But the drug division of the FDA (CDER) does a much better job of this, particularly when you are looking at large phase III’s with good meaningful endpoints like survival.

For vaccines it is a completely different story, and this is the reason I have a huge problem with most of them. Vaccines should require the highest standard in terms of safety and efficacy trials, since the first principle of medicine is to do no harm and you are treating many people who are not sick and have little risk of a major negative health outcome. However, it is not uncommon for cancer studies to have more patients and more substantial follow up than a vaccine trial.

Let’s take the average flu vaccine. Most are approved every year based on a primary endpoint of an immunogenic response to the vaccine in an absurdly small number of patients. Just because you have high antibody titers does not mean it will prevent you from getting a disease. This is what is called a “surrogate endpoint,” something that you have good reason to believe correlates to a desired effect, but does not directly measure it or even prove an effect.

For most pharmaceutical drugs, surrogate endpoints are not allowed. If they were, anti-cholesterol medications would only have to show a reduction in LDL levels and not prove they prevent cardiac morbidity. If Pfizer had been allowed to get away with this, their CETP inhibitor that raised HDL levels and predictably did nothing for preventing heart disease would probably be approved and selling billions of dollars right now.

In reality, most healthy people are not even going to get the flu. Not everyone who has high titers after a vaccine will be immune. And only a small fraction of those who do get the flu are going to have serious or fatal disease. And this is the really important number you need to factor into your risk versus reward decision calculus, not some surrogate endpoint like immunogenicity or even the number of people who just come down with the flu.

Think about it this way. Imagine they came out with a vaccine for the common cold. The pharmaceutical company proves it works and lowers the rate of common cold infections in an actual clinical study by 95%. Let’s say that is from 5% to 0.25% and the difference has a p-value of less than 0.00001. Sounds great, right?

But what if I told you there was a 1 in 1,000 chance or even a 1 in 1,000,000 chance of getting a rare autoimmune disorder that would cripple you for the rest of your life if you take the vaccine? Some risk takers might take those odds, but I certainly would not. I know my odds of dying from a common cold are nonexistent. It is an inconvenience at worst. I would worry a lot more about that 1 in 1,000,000 chance of getting the life debilitating autoimmune disorder, which might not even be measurable in a 40,000 patient clinical trial and might only show up on mass use of the product, not to mention the other side effects no one knows about yet. I guarantee you, all those people who did get Guillain-Barré are regretting it.
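The thought experiment can be laid out as simple expected counts. All of the numbers are the hypothetical ones from the example above; the one extra assumption is that a 40,000 patient trial is split evenly, leaving 20,000 patients in the vaccine arm.

```python
def expected_cases(risk: float, people: int) -> float:
    """Expected number of people affected at a given per-person risk."""
    return risk * people


PEOPLE = 1_000_000
TRIAL_ARM = 20_000  # assumes a 40,000 patient trial randomized 1:1

# Colds prevented: the rate drops from 5% to 0.25%.
colds_prevented = expected_cases(0.05, PEOPLE) - expected_cases(0.0025, PEOPLE)
print(f"colds prevented per million vaccinated: {colds_prevented:,.0f}")

for one_in in (1_000, 1_000_000):
    risk = 1 / one_in
    print(f"risk of 1 in {one_in:,}: "
          f"{expected_cases(risk, PEOPLE):,.0f} crippled per million vaccinated, "
          f"{expected_cases(risk, TRIAL_ARM):.2f} expected in the trial arm")
```

The counts make the trade-off explicit: tens of thousands of minor illnesses avoided on one side, a number of permanent injuries on the other, and at the lower risk level an expected case count in the trial arm far below one patient.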

With healthy patients, the rule should be “do no harm” first and foremost. If there is a serious risk from a disease like COVID-19, what should be examined are the severe outcomes like ICU admissions and death, because avoiding those outcomes is the main reason most people would consider getting the vaccine. This is particularly the case if you do see a significant difference in severe side effects in the vaccine arm versus placebo.

When you look at the severe or serious cases as the true meaningful “treatment effect” of vaccines, the problem you run into is that the treatment effect can be lower than the actual matched severe adverse events! You can no longer dismiss what might be an aggregation of low-probability events in the 1% range if the risk of severe disease is less than that. If the number of severe adverse events is greater than the number of severe cases of disease you are treating, then just on that one sample you have by definition a better than 50% chance (the exact amount can be found by applying appropriate statistical analysis) of the treatment being worse than the disease for what really matters: long term harm to your body.

If you just analyze a low intensity primary endpoint (like plain flu incidence) and compare it to low probability severe adverse events, as most people do in most pharmaceutical trials, you miss an extremely important subtlety in the risk versus reward calculation.

For patients who are relatively healthy, where the medication is preventative, the primary endpoint of any study should not just focus on efficacy. It should focus on the more practical measure of whether the important treatment effect outweighs the accumulation of all the side effects. The pure measure of efficacy in terms of mild disease, like catching the flu or COVID-19, should be a secondary endpoint that is certainly important, but not as important as that benefit being demonstrably greater than the side effects. And there should be a strong correlation between those endpoints anyway. If the drug works on the primary endpoint, it would be hard to imagine that the secondary endpoint regarding any measure of efficacy alone (severe or moderate) would not also show statistical significance to an even greater extent.

A time to total morbidity endpoint, where you have a specific way of grading certain severe events, is in concept the best way to evaluate such trials. This provides you with a true risk versus reward picture for the patient who is otherwise healthy. This is the last thing pharmaceutical companies want, because they know most of the vaccines that are on the market today would probably not fare so well, since the actual real life morbidity of most of these diseases is ephemeral at best. The yearly odds of dying of mumps, measles, or the flu, especially with modern healthcare, would be low for any individual. You would then have to compare that benefit to the many very low probability side effects that in aggregate could overwhelm the treatment effect with regard to severe disease.

This is a point I believe a lot of “vaccine skeptics” are trying to make, often without having the clinical and statistical background to explain it to well-meaning physicians in the medical establishment.

Most “thought leaders” are heavily influenced by the paradigm that minimizes severe adverse events in healthy populations relative to mild to moderate treatment effects, partly due to the overwhelming influence of big pharma at all levels of the industry, as discussed at the beginning of this article. That allows clinical investigators to treat vaccines as if they were just another pharmaceutical drug given to sick patients. This is the way things have always been done and evaluated. In a Milgram experiment fashion, they do not even question it. No one has an incentive to question it other than consumer protection advocates from the outside looking in and those who have experienced an injury and struggle to receive adequate compensation.

We are using the wrong primary endpoint and decision calculus for vaccine trials in healthy populations, and dramatically biasing the risk versus reward calculation in favor of big pharmaceutical companies. I believe most of the vaccines, when viewed through this lens, would be widely considered bad ideas.

But if the need is really there, like with a serious pandemic with a double digit mortality rate and very high R value, the statistically significant time to total morbidity data should be easy to demonstrate. When the need is not there, as with the 0.009% death rate and 0.13% severe disease rate seen in the Pfizer COMIRNATY clinical trials placebo groups, the trials would probably not even be performed.

After all, based just on the COVID-19 data that is public, you have a 41.6% higher risk of getting some severe outcome (severe adverse event or severe COVID-19) by taking the vaccine versus placebo, and the difference in the two arms is highly statistically significant.

In my opinion, based on Pfizer’s own data and no conspiracy theories, no one should be taking this vaccine unless they want to volunteer to be a guinea pig. The medical industry would be better served to focus on treatments to reduce disease morbidity, many of which no one wants to talk about because they are cheap and generic and undermine the argument for vaccinating everyone, which is what makes drug manufacturers a lot of money.

Pfizer is the vaccine we know the most about and that has a BLA so I have focused on it. I suspect when we see the final information after the other COVID-19 vaccines are also blindly rubber stamped with a BLA by the FDA, the decision calculus based on their own data will be much the same, before we even talk about the even more serious concerns such as pathogenic priming.